dstack

About dstack

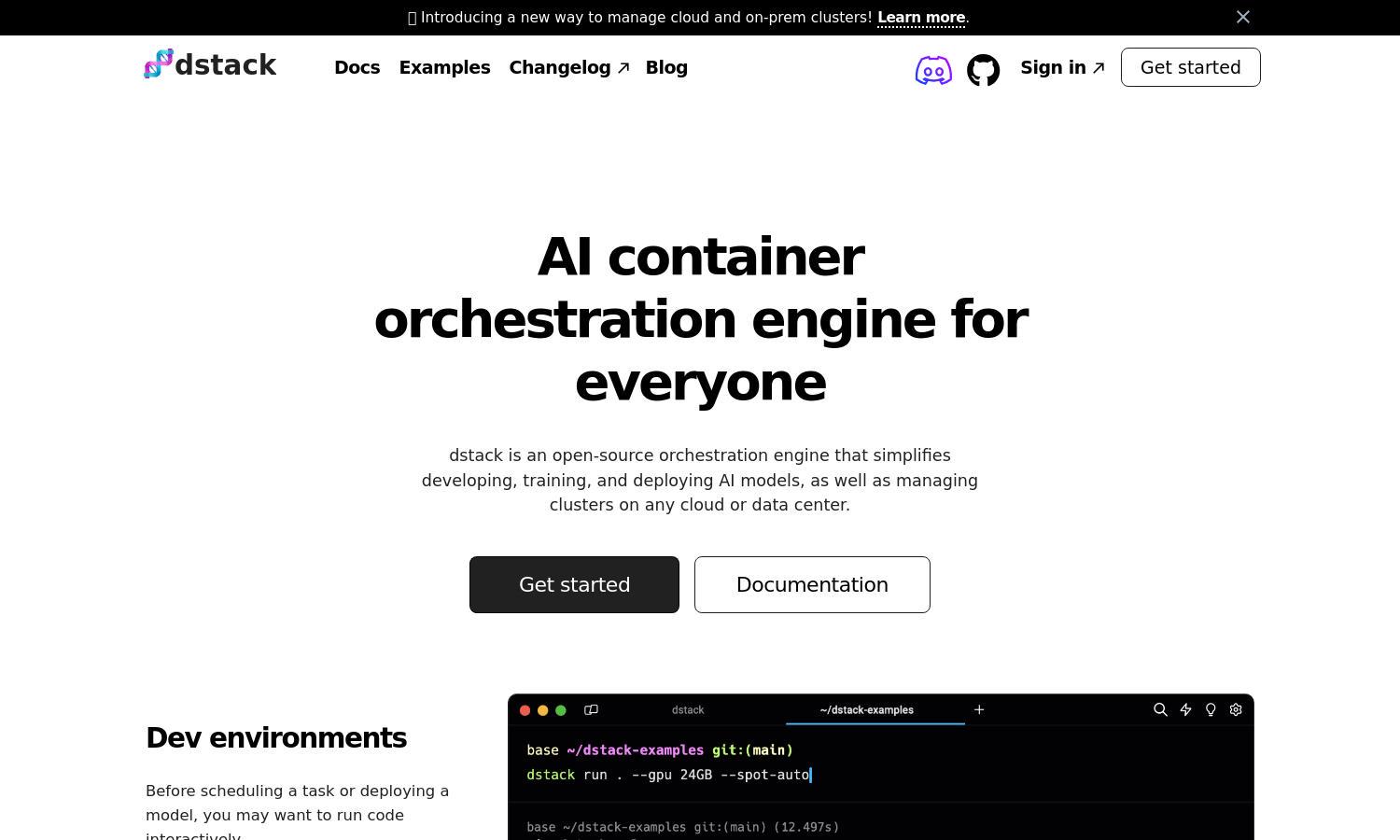

dstack is an open-source container orchestration platform built for AI engineers. It simplifies the development, training, and deployment of AI workloads on cloud and on-prem servers. Because dstack handles resource management, teams can spend more time on their models and less on infrastructure.

The open-source version of dstack is always free, and users can self-host it on their own cloud resources. Custom enterprise plans are available for teams that need additional features and support as they scale their AI workloads.

The dstack user interface is built for simplicity, with a clean layout and straightforward controls. Users can quickly set up environments, schedule tasks, and manage services.

How dstack works

Users begin by signing up for dstack and setting up their environments with simple commands. After onboarding, the platform guides them through provisioning remote machines, scheduling tasks, and deploying services. These features are tailored to AI workflows and are meant to reduce the time spent on resource management.

Key Features of dstack

Multi-cloud Support

dstack's multi-cloud support lets users work with major cloud providers as well as on-premise servers. AI workflows can be deployed without vendor lock-in, which improves resource availability and can lower the cost of running machine learning environments.
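
To make the cost and availability argument concrete, here is a minimal sketch of picking the cheapest machine across several backends. It does not use dstack's API: the `Offer` type, the `cheapest_offer` function, and the backend names and prices are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    """A hypothetical machine offer from one backend (not a dstack type)."""
    backend: str            # illustrative name, e.g. a cloud provider or "on-prem"
    gpu_count: int
    gpu_memory_gb: int      # memory per GPU
    price_per_hour: float   # made-up price in USD


def cheapest_offer(
    offers: list[Offer], min_gpus: int, min_gpu_memory_gb: int
) -> Optional[Offer]:
    """Return the lowest-priced offer that meets the requirements, or None."""
    matching = [
        o for o in offers
        if o.gpu_count >= min_gpus and o.gpu_memory_gb >= min_gpu_memory_gb
    ]
    # default=None covers the case where no backend can satisfy the request
    return min(matching, key=lambda o: o.price_per_hour, default=None)


if __name__ == "__main__":
    offers = [
        Offer("cloud-a", 1, 24, 1.20),
        Offer("cloud-b", 1, 80, 2.50),
        Offer("cloud-c", 1, 80, 3.10),
    ]
    print(cheapest_offer(offers, min_gpus=1, min_gpu_memory_gb=40))
```

With more than one backend in the pool, a request that one provider cannot satisfy (or can only satisfy at a high price) may still be met by another, which is the practical meaning of avoiding lock-in.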

Task Scheduling

The task scheduling feature lets users manage and run jobs, whether they are training AI models or deploying web apps. It helps optimize resource allocation, making AI development faster and more reliable.
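
As a rough illustration of how a scheduler allocates resources to jobs, the sketch below starts jobs in submission order while GPUs remain and queues the rest. This is a generic first-in, first-out policy, not dstack's actual scheduling logic; the `schedule` function and the job names are hypothetical.

```python
from collections import deque


def schedule(jobs: list[tuple[str, int]], free_gpus: int) -> tuple[list[str], list[str]]:
    """Strict FIFO: start jobs in order while the head of the queue fits.

    `jobs` is a list of (name, gpus_needed) pairs in submission order.
    Returns (started_job_names, still_queued_job_names).
    """
    pending = deque(jobs)
    started: list[str] = []
    # Stop at the first job that does not fit, so later jobs never jump ahead.
    while pending and pending[0][1] <= free_gpus:
        name, gpus = pending.popleft()
        free_gpus -= gpus
        started.append(name)
    return started, [name for name, _ in pending]


if __name__ == "__main__":
    started, queued = schedule([("train", 4), ("eval", 1), ("serve", 2)], free_gpus=6)
    print(started, queued)
```

Strict ordering is the simplest policy to reason about; a real scheduler may also let small jobs run ahead of a large blocked one, at the cost of more complex fairness rules.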

Auto-scaling Services

dstack provides auto-scaling services: users deploy applications and models as endpoints whose resources adjust automatically with demand. This keeps applications responsive while using resources efficiently, which matters for AI applications with variable load.
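
One common way to adjust resources to demand is to target a fixed request rate per replica. The sketch below shows that calculation under stated assumptions: the function name, its parameters, and the requests-per-second metric are hypothetical and are not taken from dstack's configuration or API.

```python
import math


def desired_replicas(
    requests_per_second: float,
    target_rps_per_replica: float,
    min_replicas: int,
    max_replicas: int,
) -> int:
    """Return the replica count needed to keep each replica near its target load."""
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    # Round up so that no replica is pushed above the target rate.
    needed = math.ceil(requests_per_second / target_rps_per_replica)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    print(desired_replicas(45, 10, min_replicas=1, max_replicas=8))
```

The lower bound keeps the endpoint available when traffic is idle, and the upper bound caps spend during traffic spikes.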